Computer Vision at the Edge: ARM SoCs

A discussion on how ML on ARM SoCs is closer than many might think

I’ve decided to break the discussion about hardware into two parts: ARM based and x86 based. You may be wondering why I would separate out the hardware based on the CPU instruction set. Well, the way that I see it is that these two instruction sets are tightly bound with the types of GPUs that get applied. Generally speaking, the types of GPUs that I want to talk about in this post are an integral component of the System on a Chip (SoC) of these ARM based devices. Now, whether I should call them ARM based devices is definitely up in the air but it felt like a good distinction to me…

I love hardware and I’ve learned a lot about when to use certain hardware over others which means that I have a lot to say. This is going to be an amalgamation of the knowledge I’ve gained in my experience so that, hopefully, people will make smarter decisions when it comes to their hardware, understand when it’s appropriate to use small form-factor devices, and help their devs help them. If you haven’t already, please check out my other post where I talk about what edge computing is and what questions to ask yourself before getting started.

In the last several years, we’ve seen a boom in machine learning applications in no small part thanks to improvements in hardware. A lot of research went into training computers to perform tasks such as handwritten digit recognition, including the work by Yann LeCun and others in 1989. It wasn’t until around the 2010s that this research became practical for large-scale adoption thanks to advances in hardware. Nvidia’s hardware has been the go-to standard for ML applications lately because of the company’s large investment in targeting its hardware at ML workloads. We’re at the point now where consumer-grade hardware can run decently large Computer Vision (CV) models in real time (30 FPS for many cameras), which allows anyone to build their own PC at home and run CV models for their personal use.

In this post, I’m going to talk about various types of hardware available for use in CV applications, their typical use cases, and some drawbacks. As I mentioned in my previous post about questions to ask before beginning to use CV at the edge, “edge” covers a wide range of hardware from embedded devices to servers. Here, we’ll take a look at the different ARM based hardware that is often used in ML and how these devices can be applied to CV tasks.

Smartphones

To kick things off, we’ll start with the edge node that almost everyone has on them at all times: smartphones. Current smartphones have gotten extremely powerful and run all sorts of models. As of writing this, each of the major phone manufacturers in the US (Apple, Google, and Samsung) has deployed extremely powerful CV models for everything from segmenting objects from photos, to enhancing your photo’s HDR, to making photos better represent darker skin tones. Many people don’t realize how close they truly are to computer vision, with countless CV-powered features in use on a daily basis.

The models that typically get deployed on a smartphone aren’t necessarily the same as the ones that are deployed on consumer hardware. There’s a specific recipe to follow when preparing “mobile” models. This is because the hardware architecture of smartphones is based around the ARM System-on-a-Chip (SoC) design. These SoCs have an ARM-based CPU and special APIs for the GPUs. Fortunately, support for mobile devices is currently implemented in TensorFlow (using TFLite), and PyTorch has its own version, PyTorch Mobile, in beta.

Update: On October 17th, PyTorch announced ExecuTorch which is an exciting new solution for porting models to a variety of edge devices including smartphones, wearables, embedded devices, and more.

The main limitation when implementing CV models on something like a smartphone is the SoC. These are, by design, small form-factor computers that typically need optimized models. If we try to run the same full-sized model that we run on an Nvidia RTX 4080, we’ll find that it either takes ages for each image or fails to run at all. Smartphones generally have fairly limited memory and compute but there are ways that we can optimize our model for them. Two of the big ones are quantization and pruning. Both make CV models more memory and compute efficient by reducing the amount of data that the device needs to store and process. I plan on covering these topics in a follow-up post but in short, quantization is where you reduce the precision of your data (e.g. float32 to int8), which in turn reduces the required memory and compute since there are fewer bits in the data. Pruning is where you remove the parts of your model (weights or nodes) that contribute the least, typically those whose magnitude falls below a certain threshold. Both of these strategies can reduce the accuracy of a model but can significantly decrease the memory footprint and the latency. You’ll find that most models on smartphones have one (or both) of these optimizations applied so that users aren’t waiting 20+ seconds to get a result.
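To make those two ideas a bit more concrete, here is a minimal sketch in plain Python. It is not what TFLite or PyTorch do internally; it assumes the simplest variants (symmetric per-tensor int8 quantization and magnitude-based pruning), and the function names and example weights are made up purely for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: one scale maps floats to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the int8 values back to approximate floats."""
    return [q * scale for q in quantized]


def prune_by_magnitude(values, threshold):
    """Zero out weights whose absolute value is below the threshold."""
    return [0.0 if abs(v) < threshold else v for v in values]


# Hypothetical weights, not taken from any real model.
weights = [0.42, -1.27, 0.003, 0.9, -0.05]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, threshold=0.1)

# Each int8 value needs 1 byte instead of the 4 bytes of a float32.
print("int8 values:", quantized, "scale:", scale)
print("restored   :", restored)
print("pruned     :", pruned)
```

The restored values differ slightly from the originals, which is the accuracy cost mentioned above. Note that zeroed weights only save memory or time if the runtime actually takes advantage of the sparsity, so real frameworks do more than this sketch shows.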

All that being said, smartphones may be one sector to closely watch in the future. As phones get more and more powerful and models get more and more efficient, it’s now easier than ever to run custom models on phones for edge inference. Continued growth in this area will make it easier for everyone to expand their ML footprint with just the phones that people carry around with them every day.

Single-board computers (Raspberry Pi/Nvidia Jetson)

When I say single-board computers, I am generally speaking about Raspberry Pi and Nvidia Jetson. These are called single-board computers because the entire system is on a single board (straightforward enough). Here, I’ll speak about the Jetson lineup because that’s specifically designed for ML workloads but there’s no particular reason why CV models couldn’t work on a Raspberry Pi. The setup may be more restricted but in general, any particular optimization for Jetson is going to help in the case of a Pi. My understanding is that the Pi is a more general use board, not specifically tailored for ML but models will still run on CPU.

Note: I will be using “Jetson” as the short-hand for the entire lineup. My experience is using the Jetson Xavier NX so most of this applies specifically to that device. As of writing this (24 Aug 2023), the Xavier NX is roughly in the middle of the Jetson lineup, as far as performance, so you may extrapolate to other devices as you like.

I’ve been fortunate enough to work with the Nvidia Jetson Xavier NX and it is a very surprising piece of hardware. These single-board computers, like the Jetson lineup and Raspberry Pi lineup, are impressive not because of their performance numbers, per se, but because of their power draw. The Nvidia Jetson Xavier NX, for instance, defaults to a power level of 15W and can run un-pruned, mixed-precision instance segmentation models at around 10-15 FPS, depending on the backbone. This, to me, is bonkers. For reference, an Nvidia RTX 4060 has an idle power draw of 12W. Yes, for roughly the same power as a 4060 that isn’t even doing anything, you can run a freaking full-fledged CV model with extremely reasonable latency. This is enough to be considered real-time in some cases!

This is also effectively a worst-case scenario for a low-power device such as a Jetson. The model I’m talking about, from my own testing, was a Mask-RCNN that used EfficientDet Lite0 as the backbone. EfficientDet Lite0 is one of the smallest and fastest models out there and as such does not necessarily give the most accurate results. My testing also excluded several strategies to improve performance like reducing input image size, pruning, and quantization. I was also using an instance segmentation model rather than something computationally cheaper such as plain object detection.
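To put the 15W and 10-15 FPS figures in perspective, here is a quick back-of-the-envelope sketch. It assumes the board really draws its full 15W budget while running the model, and the 60 Wh battery is a hypothetical number chosen only for illustration; the helper names are also just for this example.

```python
def joules_per_frame(power_watts: float, fps: float) -> float:
    """Energy per processed frame; a watt is one joule per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return power_watts / fps


def runtime_hours(battery_wh: float, power_watts: float) -> float:
    """Hours a battery lasts at a constant draw, counting compute only."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return battery_wh / power_watts


JETSON_WATTS = 15.0  # default power level quoted above (assumed fully used)

for fps in (10, 15):
    energy = joules_per_frame(JETSON_WATTS, fps)
    print(f"{fps} FPS at {JETSON_WATTS:.0f}W -> {energy:.2f} J per frame")

# Hypothetical 60 Wh battery pack, ignoring motors, radios, and sensors.
print(f"Runtime on 60 Wh: {runtime_hours(60.0, JETSON_WATTS):.1f} h")
```

In other words, each segmented frame costs somewhere around 1 to 1.5 joules under these assumptions, which is why this class of device is attractive on battery power.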

Let’s get back on topic, though. We’ve talked about one of the more impressive features of the Jetson lineup, namely the ability to run models around real-time with an extremely low power draw. This is no accident. Nvidia has specifically designed these devices to be tiny and require very little power. This makes them extremely well suited for tasks such as autonomous robots, CV on drones, and anything where you may be on battery or power is a concern but you still want close to real-time inference. It also makes the Jetson lineup extremely powerful (and profitable)! They can be placed almost anywhere, with various amounts of power, and run with near desktop performance! There are truly too many applications to cover here but this hardware is an extremely impressive feat and is only getting better. The applications are basically endless and you can find some of Nvidia’s “success stories” here.

This all sounds great and you may be thinking of all the places you could use a Jetson, but one thing that I found people weren’t asking themselves is: “Why shouldn’t I use a Jetson?”. In my experience, people were very quick to throw out the idea of using a Jetson when what their project really needed was this hardcore inference server from Lambda Labs. Joking aside, it’s important to know the limitations of a device such as a Jetson before ordering one and forcing your devs to fit to the device.

So when shouldn’t you use a Jetson? In most cases, you shouldn’t use a Jetson if you have the space and power for a full size computer. Optimizing a model to run on a Jetson device currently takes a good amount of effort and you should make sure that you really need it. The Jetson devices also aren’t particularly cheap and you can almost certainly get a much more powerful machine for much less money. Below are some essential questions to ask yourself when considering getting a Jetson.

Do I have limited space or am I planning on putting this on a drone or small robot?

Do I have limited power or am I using a battery that I want to last as long as possible?

Do I need to run at real-time?

What type of model architecture do I need for this use-case to work?

What size model do I need to use for this project to be accurate enough?

What’s my budget?

In my experience, while the Jetson devices are powerful, many people assume them to be a drop-in replacement for a PC with a dedicated GPU. They simply aren’t. A Jetson is a powerful device that sips energy but it shouldn’t be used as your real-time inferencing platform unless you really need it.
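For the “Do I need to run at real-time?” question, one rough way to check is to compare the measured per-frame latency against the frame budget of your camera. The sketch below is only an illustration: `fake_inference` is a hypothetical stand-in for a real model call, and the helper names are made up for this example.

```python
import time


def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame, in milliseconds, at the target frame rate."""
    return 1000.0 / target_fps


def measure_latency_ms(infer, runs: int = 20) -> float:
    """Average wall-clock latency of infer() over `runs` calls."""
    infer()  # warm-up call so one-time setup costs are not counted
    start = time.perf_counter()
    for _ in range(runs):
        infer()
    return (time.perf_counter() - start) * 1000.0 / runs


def meets_realtime(latency_ms: float, target_fps: float) -> bool:
    """True if one frame can be processed within the frame budget."""
    return latency_ms <= frame_budget_ms(target_fps)


def fake_inference():
    # Hypothetical workload standing in for a real model forward pass.
    sum(i * i for i in range(10_000))


latency = measure_latency_ms(fake_inference)
print(f"Measured latency: {latency:.2f} ms")
print(f"Budget at 30 FPS: {frame_budget_ms(30):.1f} ms")
print("Fits a 30 FPS camera:", meets_realtime(latency, 30))
```

As a sanity check against the numbers above: a model running at 10 FPS takes about 100 ms per frame, which does not fit the roughly 33 ms budget of a 30 FPS camera, so you would either skip frames or need a faster setup.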

Apple Silicon

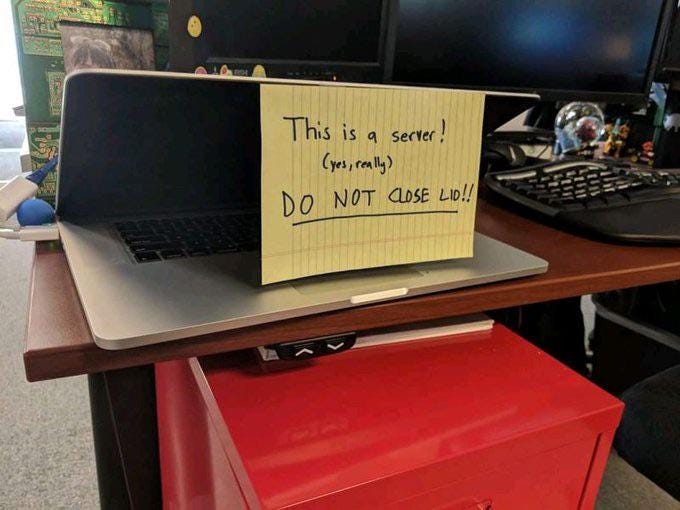

Apple Silicon is relatively new to the field but already boasts some impressive performance when it comes to battery life and just raw power. Apple Silicon’s Unified Memory lets the CPU and GPU share a single pool of memory, so the GPU can access massive amounts of memory without data being copied back and forth, allowing it to run models that are significantly larger than you’d normally see in a consumer device. This means that, given the right tooling, you should be able to at least load huge models or load large batches of data on the GPU. As far as tooling goes, PyTorch, TensorFlow, and even JAX can all run on Apple Silicon, which means that you only need to make minor changes to use your laptop (like the one at the top of this article) as your own edge inference server!
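As an example of how minor those changes can be, here is a small sketch of device selection in PyTorch, which exposes the Apple Silicon GPU through its `mps` backend. The `pick_device` helper is a made-up name for this post, and it falls back to the CPU when PyTorch isn’t installed or no GPU backend is available.

```python
def pick_device() -> str:
    """Return "mps" on Apple Silicon, "cuda" on Nvidia GPUs, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch is not installed, so there is nothing to accelerate

    # Older PyTorch builds may not ship the MPS backend at all.
    mps_backend = getattr(torch.backends, "mps", None)
    if mps_backend is not None and mps_backend.is_available():
        return "mps"
    if torch.cuda.is_available():
        return "cuda"
    return "cpu"


device = pick_device()
print(f"Running on: {device}")

# With PyTorch installed, a model and its inputs would then be moved over with
# model.to(device) and batch.to(device) before running inference.
```

The rest of the inference code can usually stay the same, although not every operator is guaranteed to be supported on every backend.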

What does this mean for practical applications? Well, the lower end Apple Silicon isn’t currently well suited for most ML tasks but the improvement has been extremely impressive. These M1 benchmarks from Sebastian Raschka show just how close Apple got with their first iteration.

As you can see, the M1 Ultra is very close to dedicated (albeit fairly old) GPUs such as the 1080 Ti and some laptop versions of the 30 series GPUs. That’s not bad for a first effort, especially when there’s still work to be done on the software side. The Apple M2 Ultra was announced in June 2023 so there aren’t really benchmarks for that chip yet, but I would expect a huge improvement in inference time, especially with the benefit that the unified memory brings to inference and training.

Right now, it’s looking like Apple Silicon could be a strong contender for cost-effective and space efficient computer vision edge nodes in the future! Their improvement has been extremely impressive and it’s hard to see what parts of ML this wouldn’t impact! I would keep an eye out for this one. People might just be setting up Apple laptops as their edge nodes in the near future!

Conclusion

The ARM architecture got quite a bit of coverage a few years ago when Apple made the switch to Apple Silicon and for good reason. It’s led to some impressive applications like in the case of new Apple computers and allows for some handy edge node use cases such as the ability to turn a drone into your own flying inference server with an Nvidia Jetson. Let us not forget that almost everyone has their own edge node in their pocket with the ability to run computer vision models with negligible latency. I am personally excited about these improvements in the capabilities of devices such as Jetsons, Apple Silicon powered computers, and smartphones. These devices allow for some fun niche applications that may not have been possible otherwise due to the combination of small form-factor, low power draw, and high-ish performance in ML tasks.

In the next part of this series, I will be covering the different consumer and enterprise grade hardware available for machine learning.